Sustainability has gained tremendous mindshare across the world. Energy conservation and CO2 emission reduction are among the key initiatives for environmental sustainability. Since data centers account for as much as 1% of global electricity usage, optimizing their power consumption can have a meaningful impact.

In the cloud computing era, cloud native sustainability spans many areas and touches upon almost every aspect of system architecture, ranging from chip design all the way to application development.

Here at Red Hat, the Emerging Technologies Sustainability Team focuses on cloud native sustainability technologies, projects, and methodologies. Specifically, we are exploring how to give end users insight into the energy consumption of their workloads. This insight will enable innovations in energy-efficient workload scheduling, tuning, and scaling.

To this end, we are proud to present Project Kepler, a community-driven open source project that aims to export workload energy consumption metrics across a wide range of platforms.

Note: Red Hat’s Emerging Technologies blog includes posts that discuss technologies that are under active development in upstream open source communities and at Red Hat. We believe in sharing early and often the things we’re working on, but we want to note that unless otherwise stated the technologies and how-tos shared here aren’t part of supported products, nor promised to be in the future.

KEPLER: Kubernetes-based Efficient Power Level Exporter

Kepler started as a collaboration between Red Hat OCTO and IBM Research. The project builds on prior research into runtime estimation of system power consumption, that is, using CPU performance counters and machine learning (ML) models to estimate the power consumption of workloads.
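
The estimation idea behind this research can be illustrated with ordinary least squares: fit measured node power as a linear function of performance counters, then predict power from new counter readings. This is a minimal sketch under stated assumptions, not Kepler's implementation. The two features (CPU cycles and cache misses, in millions per second), the synthetic samples, and the helper names are hypothetical, and a plain linear regression stands in for the ML models Kepler actually uses.

```python
"""Minimal sketch: estimate node power from CPU performance counters with a linear model.

Assumptions (hypothetical, for illustration only): two counter features
(CPU cycles and cache misses, in millions per second) and a measured
node power label in watts. Not Kepler's actual model.
"""
from typing import List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    # Augmented matrix so row operations apply to a and b together.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_power_model(features: Sequence[Sequence[float]], watts: Sequence[float]) -> List[float]:
    """Ordinary least squares via the normal equations (X^T X) w = X^T y.

    Returns [intercept, w_1, ..., w_k]; the intercept approximates idle power.
    """
    rows = [[1.0, *f] for f in features]  # prepend a bias column
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, watts)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict_power(weights: Sequence[float], counters: Sequence[float]) -> float:
    """Predicted node power in watts for one vector of counter readings."""
    return weights[0] + sum(w * c for w, c in zip(weights[1:], counters))


if __name__ == "__main__":
    # Synthetic samples generated from watts = 50 + 0.02*cycles + 0.5*cache_misses.
    samples = [[1000.0, 10.0], [2000.0, 30.0], [1500.0, 5.0], [3000.0, 40.0], [500.0, 20.0]]
    watts = [50 + 0.02 * c + 0.5 * m for c, m in samples]
    weights = fit_power_model(samples, watts)
    print([round(w, 4) for w in weights])
    print(round(predict_power(weights, [2500.0, 25.0]), 2))
```

The fitted intercept plays the role of idle power and each slope the energy cost of a counter event; a real model would need many more samples, real measurements, and validation on held-out data.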

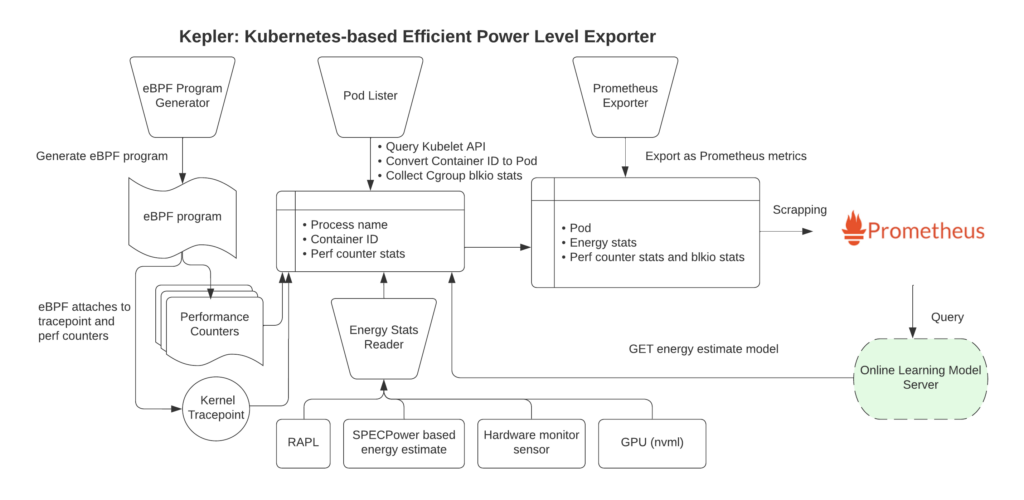

Kepler uses eBPF programs to probe energy-related system counters for each container and exports the resulting per-container energy consumption as metrics. These metrics help end users monitor their containers' energy consumption and help cluster administrators make informed decisions toward their energy conservation goals.
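
One simple way to picture how per-container counters become energy metrics is ratio-based attribution: split the node's measured energy for an interval in proportion to each container's share of a counter such as CPU cycles, accumulate the results, and render them in the Prometheus text exposition format. This is an assumption for illustration, not a description of Kepler's internals. The eBPF collection step is not shown (counter values are simply given as input), and the metric name `example_container_energy_joules_total` and the container IDs are hypothetical.

```python
"""Minimal sketch: attribute node energy to containers by counter share and
render the result in Prometheus text exposition format.

Assumptions (hypothetical): node energy per interval is already measured,
per-container CPU-cycle counts are already collected, and the metric name
`example_container_energy_joules_total` is invented for illustration.
"""
from typing import Dict


def attribute_energy(node_joules: float, cycles: Dict[str, int]) -> Dict[str, float]:
    """Split node energy across containers in proportion to their CPU cycles."""
    total = sum(cycles.values())
    if total == 0:
        # No activity observed: attribute nothing rather than divide by zero.
        return {cid: 0.0 for cid in cycles}
    return {cid: node_joules * c / total for cid, c in cycles.items()}


def accumulate(totals: Dict[str, float], interval: Dict[str, float]) -> None:
    """Add one interval's energy to running totals (Prometheus counters only go up)."""
    for cid, joules in interval.items():
        totals[cid] = totals.get(cid, 0.0) + joules


def render_prometheus(totals: Dict[str, float]) -> str:
    """Format cumulative per-container energy as Prometheus text exposition lines."""
    name = "example_container_energy_joules_total"
    lines = [
        f"# HELP {name} Estimated cumulative energy per container (illustrative).",
        f"# TYPE {name} counter",
    ]
    for cid in sorted(totals):
        lines.append(f'{name}{{container_id="{cid}"}} {totals[cid]}')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    totals: Dict[str, float] = {}
    # Two sampling intervals with hypothetical node energy and cycle counts.
    accumulate(totals, attribute_energy(100.0, {"web": 3_000, "db": 1_000}))
    accumulate(totals, attribute_energy(80.0, {"web": 1_000, "db": 1_000}))
    print(render_prometheus(totals), end="")
```

This sketch assigns all node energy, including idle power, to the active containers; a production exporter may treat idle power separately or attribute it differently.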

You can read more about the design and architecture of Kepler here. Check us out on GitHub.

Kepler Model Server

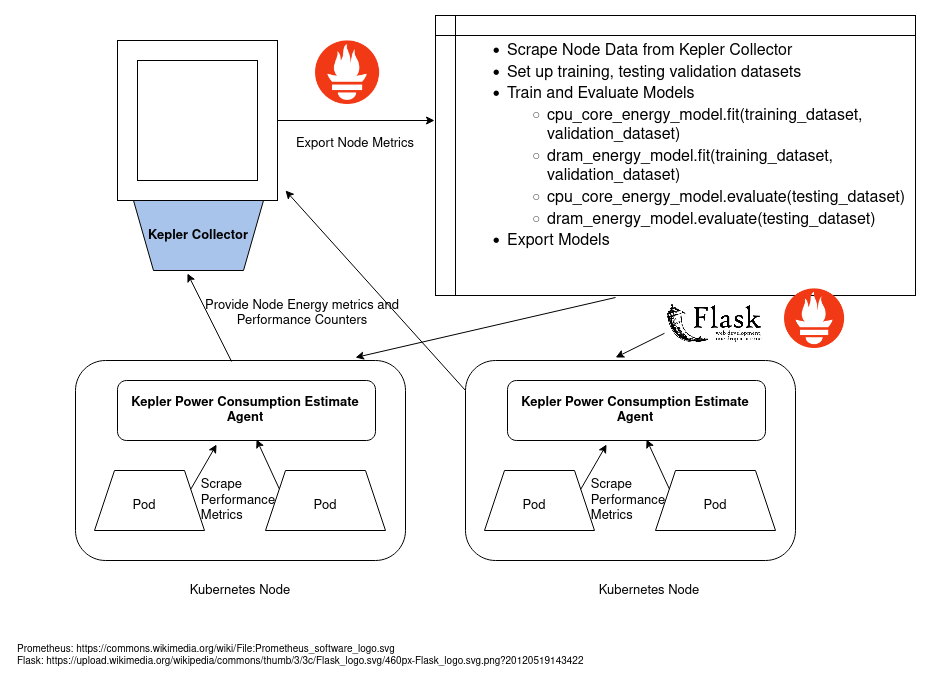

The Kepler Model Server is an internal program that provides Kepler with ML models for estimating the power consumption of Kubernetes workloads.

The Kepler Model Server pre-trains its models on node energy statistics (labels) and node performance counters (features), collected as Prometheus metrics from a variety of Kubernetes clusters and workloads. Once the models reach an acceptable level of performance, the Model Server exports them via Flask routes, and Kepler can then use them to calculate per-pod energy consumption metrics from per-pod performance counters.
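
The last step, applying a node-level model to per-pod counters, can be sketched as follows. The JSON payload stands in for what a model-serving route might return; its schema, field names, and feature names are hypothetical and are not the Kepler Model Server's actual format. The sketch also assumes a linear model, so the intercept (idle power) is kept out of the per-pod estimate.

```python
"""Minimal sketch: apply a pre-trained node-level power model to per-pod counters.

Assumptions (hypothetical): the model arrives as JSON with an `intercept` and
per-feature `weights`; the schema and feature names are invented for
illustration and are not the Kepler Model Server's actual format.
"""
import json
from typing import Dict

# A payload such as a model-serving HTTP route might return (hypothetical schema).
MODEL_JSON = """
{
  "intercept": 50.0,
  "weights": {"cpu_cycles": 0.02, "cache_misses": 0.5}
}
"""


def pod_dynamic_power(model: Dict, pod_counters: Dict[str, float]) -> float:
    """Estimate a pod's dynamic power in watts from its own counters.

    The intercept approximates node idle power, so it is deliberately left out:
    adding it per pod would count idle power once for every pod.
    Missing counters are treated as zero.
    """
    return sum(w * pod_counters.get(feature, 0.0) for feature, w in model["weights"].items())


def pod_energy_joules(model: Dict, pod_counters: Dict[str, float], seconds: float) -> float:
    """Convert estimated power to energy over a sampling interval (J = W * s)."""
    return pod_dynamic_power(model, pod_counters) * seconds


if __name__ == "__main__":
    model = json.loads(MODEL_JSON)
    pods = {
        "frontend": {"cpu_cycles": 1500.0, "cache_misses": 10.0},
        "batch-job": {"cpu_cycles": 1000.0, "cache_misses": 20.0},
    }
    for name, counters in pods.items():
        print(name, round(pod_energy_joules(model, counters, seconds=3.0), 2))
```

Because the model is linear, the per-pod dynamic estimates add up to the node's dynamic estimate whenever the pod counters add up to the node counters, which keeps per-pod metrics consistent with node-level ones.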

Unlike similar projects, the Kepler Model Server also continuously trains and tunes its pre-trained models using node data scraped from client clusters by Kepler's Power Estimate Agents. This lets Kepler further adapt its pod energy consumption estimates to each client's unique systems.
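
The post does not say how the Model Server folds newly scraped node data into its pre-trained models. As one way to picture continuous tuning, the sketch below uses online stochastic gradient descent on a linear model: each new (counters, measured watts) sample nudges the weights toward the client's own hardware. The learning rate, feature scaling, and both the "pre-trained" and "true" coefficients are hypothetical.

```python
"""Minimal sketch: keep tuning a pre-trained linear power model as new node samples arrive.

Assumption: online stochastic gradient descent on squared error is a stand-in;
the actual retraining method of the Kepler Model Server is not described here.
Features are assumed to be scaled to roughly unit range so one learning rate works.
"""
from typing import List, Sequence


class OnlinePowerModel:
    def __init__(self, weights: Sequence[float], learning_rate: float = 0.05) -> None:
        # weights[0] is the intercept; the rest pair with the feature vector.
        self.weights: List[float] = list(weights)
        self.learning_rate = learning_rate

    def predict(self, features: Sequence[float]) -> float:
        return self.weights[0] + sum(w * x for w, x in zip(self.weights[1:], features))

    def update(self, features: Sequence[float], measured_watts: float) -> float:
        """Take one gradient step on 0.5 * (prediction - label)^2; return the pre-update error."""
        error = self.predict(features) - measured_watts
        self.weights[0] -= self.learning_rate * error
        for i, x in enumerate(features, start=1):
            self.weights[i] -= self.learning_rate * error * x
        return error


if __name__ == "__main__":
    # Hypothetical model pre-trained elsewhere: watts ~ 50 + 40*x1 + 10*x2.
    model = OnlinePowerModel([50.0, 40.0, 10.0], learning_rate=0.2)
    # This client's nodes actually behave like watts = 60 + 30*x1 + 20*x2.
    stream = [[0.2, 0.9], [0.8, 0.1], [0.5, 0.5], [0.9, 0.7], [0.1, 0.3]]
    for _ in range(1000):
        for x in stream:
            model.update(x, 60 + 30 * x[0] + 20 * x[1])
    print([round(w, 2) for w in model.weights])
```

Starting from weights learned on other clusters rather than from zero is what makes this kind of tuning attractive: the pre-trained model gives usable estimates immediately, and each client's data only has to correct the difference.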

To learn more about the Kepler model server, check out:

Looking ahead

We recognize that when it comes to modeling power consumption in the cloud, all of these investigations must be grounded in scientific research, and the methodologies and algorithms we use must be transparent and open. With Project Kepler and the technologies we are developing to support it, we hope to engage a global community in reducing the environmental impact of our increasingly cloud-driven global economy. We look forward to collaborating with you!